Part 1 - Securing AI use in your organization

Cyber risk management when AI is present

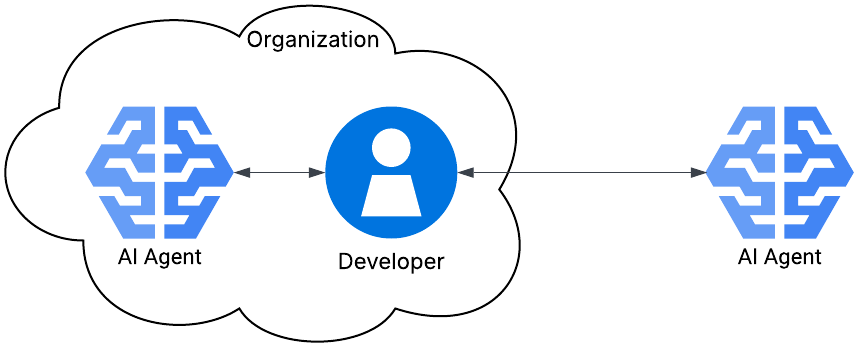

The AI use case that organizations are most likely to face today is adjusting their cybersecurity risk management to account for the presence of AI. It’s the second of the four use cases defined in my previous article and is illustrated in the diagram.

In this use case, there’s an organization of some sort with employees. The employees include developers seeking to take advantage of the benefits of AI Agents. The developers could also be termed “users” since I’m not discussing the development of the actual AI Agent, but the use of the deployed AI Agent. There are two possible scenarios: the developers are using a static AI Agent which has been deployed within the organization, or they are using an AI Agent which is available outside of the organization.

The typical use case for either of these scenarios is that the developer will issue a prompt to these AI Agents and receive a response. These interactions are represented by the arrows in the figure above. I’m going to expand on this figure to demonstrate typical information flows and what risks arise from them.

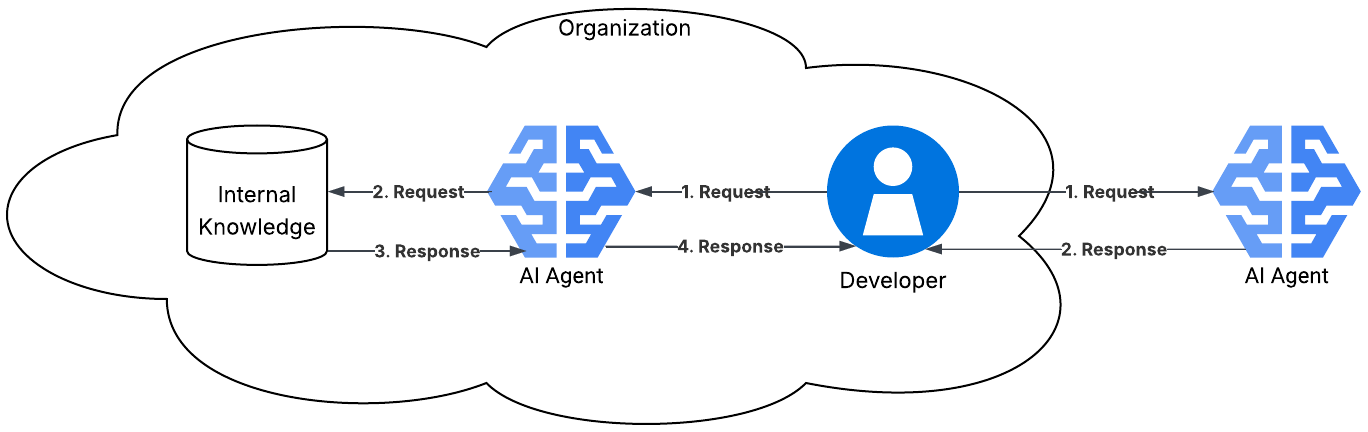

The figure below includes a database of organizational knowledge. This database may contain FAQs, business processes, data files, etc. This type of system where an AI Agent has access to your internal (or other) data and uses that data to make better decisions is called retrieval augmented generation (RAG). RAG systems are common in modern AI Agent architectures, as they help the agent produce better results. This architecture can be expanded to include other AI Agents. The main concept is that the AI Agent is using other tools in order to make better decisions with the request it was given.

As you can see in the more detailed figure, there are many requests and responses issued to and from AI Agents. Each of these arrows presents risks. Risks, for the purposes of this article, are defined as loss of integrity, confidentiality, or availability (classic cybersecurity triad).

I’m going to use a slightly different setup than the traditional “Risk = likelihood x impact.” I am positing that risk is event-based (which is not new; see definitions of risk in ISO standards). Specifically, I’m positing that within cybersecurity, there are nine risk events, the product of (lose, maintain, gain) x (confidentiality, integrity, availability). Notice that there are negative, neutral, and positive risk events. This setup allows for calculating risk tolerances based on costs in future steps.

Furthermore, in order to realize a risk (e.g., lose availability), an organization must have a method of compromise applied to it AND be susceptible to that method of compromise. Only through meeting those two conditions is the risk event realized. Organizations can then use these conditions and risk events to determine impact of an event.

I’m going to use the lack of controls as a susceptible condition, and then talk about controls which may help an organization prevent a method of compromise or mitigate the impact of a realized risk event.

Risk Identification and Analysis

For the purposes of the following risk analysis, I’m going to assume that the AI Agents have no write access to anything within the organization (no file system, cloud, email, etc.). There has already been a tremendous amount of research demonstrating that write access for AI Agents poses significant risk to organizations. While having AI Agents with write access to enterprise assets may be beneficial, they must be extremely well-understood and well-protected. The casual user will not be able to architect and protect these systems with limited resources. Therefore, I’m assuming an already heightened risk posture against AI Agents to eliminate entire classes of methods of compromise.

For every request that is present in the figure, the following methods of compromise are relevant:

Prompt injection: This method of compromise utilizes an intentional man-in-the-middle type of attack to insert additional language into the prompt in order to achieve a different outcome than the original request. Integrity of that information is compromised.

Data leakage: This method of compromise could be intentional or unintentional use of personally identifiable information (PII) or sensitive organizational data within a request. Confidentiality of that information is compromised.

Denial of service: This method of compromise involves sending a large number of illegitimate requests to the AI Agent with the purpose of overloading its processing capability, thus rendering it inoperable for legitimate requests. Availability of that information is compromised.

For every response that is present in the figure, the following methods of compromise are relevant:

Insecure AI Agent: This method of compromise involves an AI Agent that has been distributed with features which are not in alignment with the organization. Thus the response will contain information that may be harmful to the organization or others. Integrity of that information is compromised.

Hallucinations: This method of compromise involves an AI Agent sending back information within the response that is not factually correct. NIST AI 600-1 refers to this behavior as “confabulation.” The term “hallucination” is a colloquial term and hides the underlying source of this method of compromise (AI Agent training and development). Tell us in the comments if you prefer the term “hallucination” or something else. Integrity of that information is compromised.

I have decomposed the use case into its component parts, methods of compromise. Since there are no controls against these methods, there would be a realized risk of degraded confidentiality, integrity, or availability. I use the classic cybersecurity triad here to ground our analysis in the cybersecurity domain rather than generic risk management. Furthermore, these risk events will have an impact associated with them. Your organization can then use impacts with the below responses, costs, and risk tolerances to determine appropriate actions.

Risk Responses

Given the risk event and impacts, what can be done to prevent or mitigate the realized risks? The list of responses below is not exhaustive but a good starting point for an organization seeking to mitigate risks. Additionally, the costs of these risk responses vary. Depending on the risk events defined above, an organization can weigh the costs of the response against the impact of the risk event in whatever manner is best for the organization.

For prompt injection:

Never copy and paste prompts from untrusted/unknown sources.

Ensure only authorized and authenticated users are making requests.

Validate and sanitize all user inputs before they reach the AI Agent.

Use structured formats that clearly separate instructions from user data.

For data leakage:

Validate and sanitize all user inputs before they reach the AI Agent.

Monitor AI Agent requests for anomalous behavior.

For denial of service:

Implement rate limiting of requests based on known processing capabilities.

Implement rate limiting of requests based on known token or request caps.

Implement load balancing.

For insecure AI Agents:

Create clear requirements for AI Agents before deployment.

Monitor responses for inappropriate content.

For hallucinations:

Validate and sanitize all AI Agent responses before they reach the user.

Provide clear disclaimers to users about the limitations of AI Agents.

Allow the AI Agent to say “I don’t know” in prompts.

Ask for “chain of thought” in prompts.

Ask for citations for sources in prompts.

Conclusion

I have stepped through the use case where an organization has deployed its own AI Agents or uses an external AI Agent. I have identified and analyzed the cybersecurity risks associated with the use case as well as provided some actionable risk responses. Keep in mind that I have taken a fairly technical approach to this writeup. There are many other human-based controls which could be implemented to shore up our risk mitigation strategies. If you have other ideas on how to identify, analyze, or respond to these risks, please drop us a comment!

Excellent article! Thanks for posting!

Great article!