Part 0: Cybersecurity when AI is present

Updating our understanding of risk in the agentic era

Having tools perform tasks for humans is a key piece of our evolutionary tale. From rocks to metal to silicon, we have adapted the material world around us to suit our wants and needs. We are now entering a new stage of this process: having tools adapt the digital world around us. A key difference here is who is doing the work; in the past it was humans, and now it is Artificial Intelligence (AI) agents.

These agents are based on Large Language Models (LLMs), a form of machine learning or AI. For the purposes of this post, we’ll use "agent" to mean any implementation of an LLM. There are many excellent resources on what LLMs are, how they work, and how to use them to accomplish tasks. The focus of this post is how to scope the cybersecurity risk of environments where AI agents are present. We will leave the identification, evaluation, prioritization, and response strategies for another post.

To fully manage cybersecurity risk when AI is present, we have to enumerate the different ways in which AI can be present. As a college professor ingrained in me, the state space must be mutually exclusive and collectively exhaustive (MECE). With that in mind, I’m going to propose what I think is a MECE breakdown of the ways in which AI can be present in cybersecurity risk management. If you have additional use cases, please let me know in the comments.

The capabilities and functionality of AI agents are growing and changing by the day. Therefore, it is critical to derive time-independent categories which can be used even as the technology evolves. The categories I’m proposing, which are discussed below, are:

Category 1: Securing AI models

Category 2: Securing AI use in your organization

Category 3: Using AI for cyber defense

Category 4: Using AI for cyber offense
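
One way to make the MECE requirement concrete is to check a list of scenarios against the four categories. The sketch below is illustrative only: the names `AICategory` and `check_mece` and the example scenarios are my own assumptions, not part of any standard or framework. It flags scenarios that map to no category (the breakdown is not exhaustive) or to more than one (the breakdown is not exclusive).

```python
from enum import Enum


class AICategory(Enum):
    """The four categories proposed in this post (names are illustrative)."""
    SECURING_AI_MODELS = 1
    SECURING_AI_USE = 2
    AI_FOR_CYBER_DEFENSE = 3
    AI_FOR_CYBER_OFFENSE = 4


def check_mece(assignments):
    """Report scenarios that violate MECE.

    `assignments` maps a scenario name to the set of categories it was
    placed in. An empty set means the breakdown is not exhaustive; more
    than one category means it is not mutually exclusive.
    """
    unassigned = sorted(name for name, cats in assignments.items() if len(cats) == 0)
    overlapping = sorted(name for name, cats in assignments.items() if len(cats) > 1)
    return {"unassigned": unassigned, "overlapping": overlapping}


# Hypothetical scenarios, one per category.
scenarios = {
    "in-house LLM training": {AICategory.SECURING_AI_MODELS},
    "staff using a chat assistant": {AICategory.SECURING_AI_USE},
    "agent triaging security alerts": {AICategory.AI_FOR_CYBER_DEFENSE},
    "AI-written phishing campaign": {AICategory.AI_FOR_CYBER_OFFENSE},
}

print(check_mece(scenarios))
```

If a scenario from your own environment lands in either list, that is a signal the category definitions need tightening.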

Category 1: Securing AI models

From a system development perspective, there are fundamental risks which can only be addressed at the LLM level. Building a trustworthy LLM is difficult and requires a diverse team to understand the full scope of the model under development. Risks related to bias, explainability, transparency, safety, and privacy are only functionally solvable by the people who build and train the LLMs.

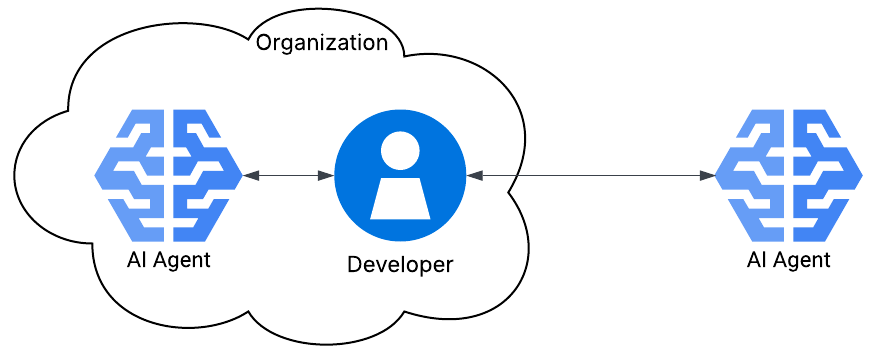

Category 2: Securing AI use in your organization

Many organizations are deploying AI agents and features into their networks, processes, and workflows. These deployments can be as simple as logging into ChatGPT and asking for ideas, or as complicated as agents automating workflows with seamless tool and resource discovery. Whatever the implementation level, organizations need to consider how to secure the AI they are interacting with on a day-to-day basis.

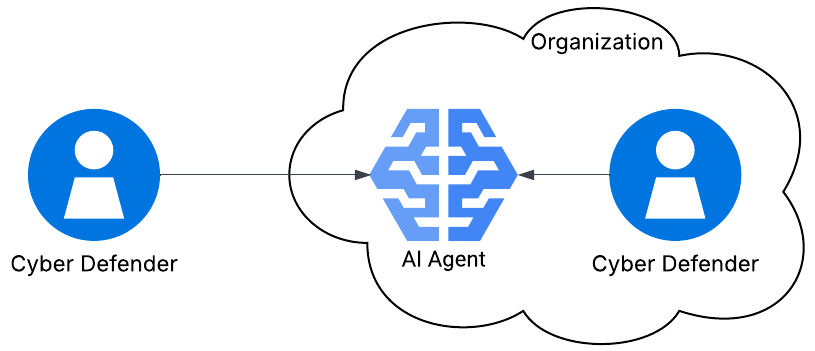

Category 3: Using AI for cyber defense

This category has similarities to the previous one. Namely, the AI agent is deployed within the network. However, there are enough distinguishing characteristics that it deserves its own category. Specifically, when organizations utilize AI agents in their cybersecurity defense programs, those agents may be deployed as first-, second-, or third-party tools. Also, the types of data that the agent will have access to are significantly different from the previous category. There are many ways in which organizations can use agents to automate and streamline cyber defense: log analysis, anomaly detection, event triage, automated response, compliance review, etc.

As with the previous category, there are inherent risks to using these tools; however, the risks differ based on the purpose of the tooling. For example, the previous category may be concerned with handling data leaks, whereas this category may be concerned with reducing false negatives (real incidents the agent fails to flag) when analyzing event logs.
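
To make the false-negative concern measurable, here is a minimal sketch of how one might compute a false negative rate for an agent that triages event logs. The function name `false_negative_rate` and the sample data are hypothetical; it assumes you have a set of events with known ground truth (malicious or benign) alongside the agent's verdicts.

```python
def false_negative_rate(labels, predictions):
    """Fraction of truly malicious events that the agent failed to flag.

    labels:      True where the event really was malicious (ground truth).
    predictions: True where the agent flagged the event.
    """
    if len(labels) != len(predictions):
        raise ValueError("labels and predictions must have the same length")

    missed = sum(1 for y, p in zip(labels, predictions) if y and not p)
    caught = sum(1 for y, p in zip(labels, predictions) if y and p)
    malicious = missed + caught
    if malicious == 0:
        raise ValueError("no malicious events in the sample")
    return missed / malicious


# Hypothetical sample: five events, three of them truly malicious.
truth = [True, True, False, True, False]
agent = [True, False, False, True, True]

# The agent missed one of the three malicious events.
print(f"False negative rate: {false_negative_rate(truth, agent):.2f}")
```

The false positive in the last event does not affect this number; it would be tracked separately, since it costs analyst time rather than letting an incident through.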

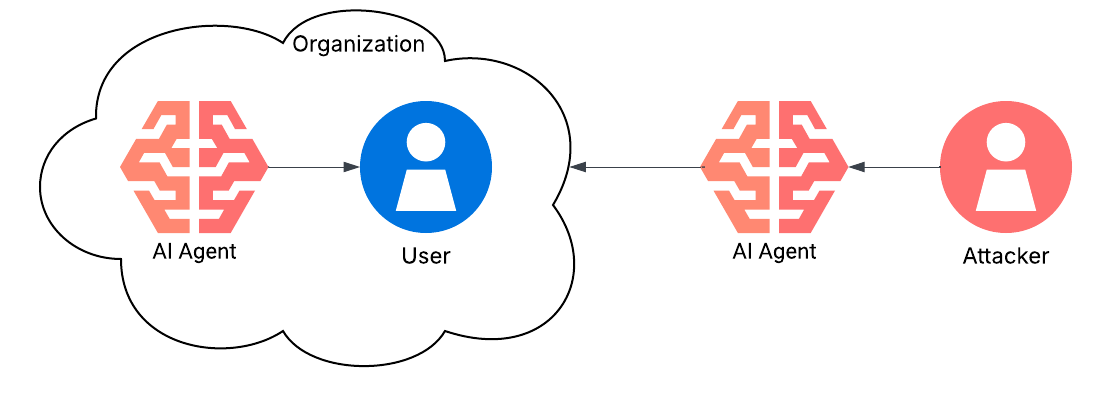

Category 4: Using AI for cyber offense

The final category is an exercise in knowing your opponent. The use of AI agents to drive the volume, veracity, velocity, variety, and value of data has pushed red team vs blue team to new levels. Phishing attacks are more believable and DDoS attacks are more potent, and the rise of AI agents as threats themselves has turned cybersecurity risk management into a multi-theater operation. For example, an AI agent built by XBOW has reached the top of a HackerOne bug bounty leaderboard. Furthermore, Anthropic, an AI company in which Amazon is a major investor, has published research detailing how AI models resorted to blackmail under specific test conditions. Whatever the attack vector, AI is demonstrably accelerating innovation in the offensive cybersecurity space. Therefore, cybersecurity risk management will need to adapt even faster.

Next steps

Now that we have segmented the intersection of the cybersecurity risk management and AI space, we must identify, evaluate, and prioritize the risks. Furthermore, we have to develop risk response strategies for those risks. We’ll discuss the next steps in future articles and update this space to link to those articles.

(No AI labor was knowingly used in the creation of this post.)
